
How Are Answers Generated by ChatInsight?

When you first try ChatInsight, you may feel disappointed or frustrated that it doesn't generate the responses you were hoping for. This brief explanation is not a guide to using ChatInsight. Instead, it gives a general picture of how ChatInsight analyzes your documents to generate responses, which will help you understand the generation process and experiment with it more effectively.

Several factors affect how an answer is generated:

● Chunking

● Token Distribution

● Advanced Settings

All these factors are interrelated. We will explain Chunking and Token Distribution here, and you can learn about the advanced settings at this link.

Think of these factors in this way:

Imagine you are a manufacturer selling your products in a shop where customers can check out only one cart at a time. Here, Chunking represents the size of each product, which determines how your content is divided and organized. Token Distribution, on the other hand, determines the cart's capacity, which dictates how much can be included in each checkout.

In this scenario, the AI acts as the customer, deciding what to include in each checkout based on the specific query it receives.
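The checkout analogy can be sketched as a small selection routine. This is a minimal illustration, not ChatInsight's actual algorithm: it assumes each chunk already carries a hypothetical relevance score and token count, and it greedily fills a cart of fixed token capacity with the most relevant chunks that fit.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    relevance: float  # hypothetical relevance score for the current query
    tokens: int       # hypothetical token count of the chunk


def fill_cart(chunks: list[Chunk], capacity: int) -> list[Chunk]:
    """Greedily pick the most relevant chunks that fit in `capacity` tokens."""
    cart: list[Chunk] = []
    used = 0
    # Visit chunks from most to least relevant.
    for chunk in sorted(chunks, key=lambda c: c.relevance, reverse=True):
        # Skip any chunk that would overflow the cart and keep looking.
        if used + chunk.tokens <= capacity:
            cart.append(chunk)
            used += chunk.tokens
    return cart


chunks = [
    Chunk("refund policy", relevance=0.92, tokens=600),
    Chunk("shipping times", relevance=0.75, tokens=700),
    Chunk("company history", relevance=0.30, tokens=500),
]
print([c.text for c in fill_cart(chunks, capacity=1200)])
# ['refund policy', 'company history']
```

Because this greedy rule skips a chunk that does not fit and keeps looking, the less relevant "company history" chunk fills the space left after "shipping times" is skipped; a real system may make a different choice here.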

What is Chunking?

Let's keep it simple. Chunking is the process in which the AI analyzes your documents and assigns each document a relevance value. During this analysis, the AI also breaks the documents down into smaller pieces according to how their contents relate to each other, which makes better use of resources and leads to better answers. Imagine you have a small cart and need to put a sofa in it: the only way to do it is to disassemble the sofa.
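The sketch below shows one simple way to break a document into chunks. It is an assumption for illustration, not ChatInsight's method: it treats blank-line-separated paragraphs as related content, packs consecutive paragraphs into chunks of at most `max_words` words, and cuts any paragraph that is too long on its own into full-size pieces, much like disassembling the sofa. The 500-word default echoes the chunk size mentioned in this article's examples.

```python
def chunk_document(text: str, max_words: int = 500) -> list[str]:
    """Split `text` into chunks of at most `max_words` words each.

    Consecutive paragraphs are packed together where they fit; words in a
    chunk are re-joined with single spaces.
    """
    chunks: list[str] = []
    current: list[str] = []  # words of the chunk being built

    for paragraph in text.split("\n\n"):
        words = paragraph.split()
        if not words:
            continue  # ignore empty paragraphs
        # Close the current chunk if this paragraph would overflow it.
        if current and len(current) + len(words) > max_words:
            chunks.append(" ".join(current))
            current = []
        # A paragraph longer than one chunk is cut into full-size pieces.
        while len(words) > max_words:
            chunks.append(" ".join(words[:max_words]))
            words = words[max_words:]
        current.extend(words)

    if current:
        chunks.append(" ".join(current))
    return chunks


doc = "a b c\n\nd e\n\nf g h i j k l"
print(chunk_document(doc, max_words=5))
# ['a b c d e', 'f g h i j', 'k l']
```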

Because Chunking heavily influences the content of the answer, it is important to optimize your knowledge base for this process to get better results. Just remember that the AI is much like a human reader: the easier your content is to read, the better the answer will be, and the more fully every piece of information can be used. For further information, please check this article.

What is the Role of Token Distribution in Answer Generation?

Various pieces of information need to be included when a question is sent to the AI model, and the answer to the question also consumes tokens from the same per-inquiry allowance. As a result, how the tokens are distributed heavily influences your bot's answers.
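To make the split concrete, here is a minimal sketch of dividing one inquiry's token limit between context and response. The 8,192-token total is a hypothetical value chosen for illustration (the real limit depends on the underlying model), and the three shares mirror the example settings described in this article.

```python
def split_budget(total_tokens: int, context_share: float) -> tuple[int, int]:
    """Divide a per-inquiry token limit into (context, response) budgets."""
    if not 0.0 <= context_share <= 1.0:
        raise ValueError("context_share must be between 0 and 1")
    context = int(total_tokens * context_share)  # rounded down
    return context, total_tokens - context       # response gets the rest


TOTAL_TOKENS = 8192  # hypothetical limit; the real one depends on the model

for name, share in [("Balanced", 0.5), ("Detailed", 0.3), ("Summarizing", 0.7)]:
    context, response = split_budget(TOTAL_TOKENS, share)
    print(f"{name}: context={context}, response={response}")
# Balanced: context=4096, response=4096
# Detailed: context=2457, response=5735
# Summarizing: context=5734, response=2458
```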

Because the tokens available for each inquiry are limited, keep a few points in mind. First, the bot will not necessarily use all the tokens you allocate every time you send an inquiry. When the bot decides the answer only needs to be short, the number of tokens you assign does not affect the answer. However, if the allocated tokens run out, the answer will be cut off. You will therefore need to consider how you will use the bot and distribute the tokens accordingly.
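The following toy simulation illustrates both points. It assumes, purely for illustration, that one word equals one token and that the model stops either when its answer is complete or when the response budget runs out; `generate` is a hypothetical stand-in, not a ChatInsight or model API.

```python
def generate(answer: str, max_response_tokens: int) -> tuple[str, bool]:
    """Toy model: emit `answer` word by word until done or out of budget.

    Returns the emitted text and whether it was cut off.
    One word is treated as one token (a simplifying assumption).
    """
    tokens = answer.split()
    emitted = tokens[:max_response_tokens]
    was_cut = len(tokens) > max_response_tokens
    return " ".join(emitted), was_cut


short_answer = "Our support team is available 24/7."
long_answer = " ".join(["detail"] * 3000)

# A short answer is identical whether the budget is large or small.
print(generate(short_answer, 4096) == generate(short_answer, 100))  # True

# A long answer that exceeds the budget is cut off.
text, was_cut = generate(long_answer, 2458)
print(len(text.split()), was_cut)  # 2458 True
```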

Examples

Here are some examples of token distribution settings:

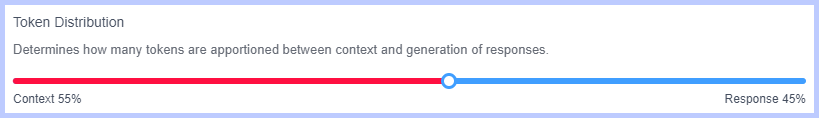

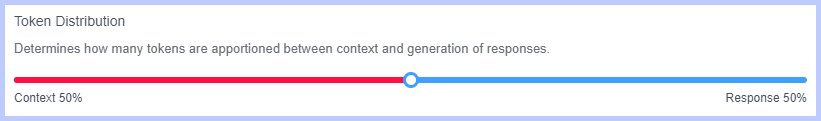

● Balanced (50% Context to 50% Response)

The default balanced distribution can handle most user scenarios. This setting can include around five medium-length references of about 500 words per chunk, and the bot can still generate a full-length article of more than a thousand words.
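As a rough sanity check on these figures, assume a hypothetical per-inquiry limit of 8,192 tokens (the article does not state the real limit, which depends on the model) and the common rule of thumb of about 0.75 English words per token:

```latex
\begin{aligned}
T_{\text{context}} &= 0.5 \times 8192 = 4096 \text{ tokens} \\
T_{\text{refs}} &\approx \frac{5 \times 500 \text{ words}}{0.75 \text{ words/token}} \approx 3333 \text{ tokens} \le 4096 \\
T_{\text{response}} &= 8192 - 4096 = 4096 \text{ tokens} \approx 4096 \times 0.75 \approx 3072 \text{ words}
\end{aligned}
```

Under these assumptions, five 500-word chunks fit in the context share with roughly 760 tokens to spare, presumably for the question and any instructions, and the response share leaves room for well over a thousand words.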

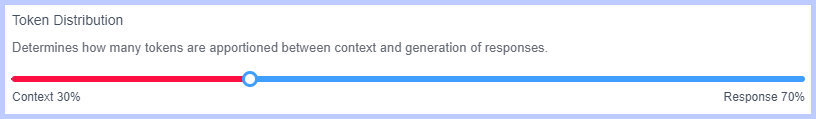

● Detailed and extended answer (30% Context to 70% Response)

This setting can support a very long answer if that is what you need. The LLM can analyze complex references and expand on them with more comprehensive and readable sentences to produce a more understandable answer. Be aware, however, that you may need to write your prompt carefully to guide the AI toward the answer you want. Otherwise, the answer might not meet your length requirement, because relatively few tokens are left for the context.
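Under the same kind of hypothetical assumptions (an 8,192-token limit and about 0.75 words per token, neither documented for ChatInsight), the arithmetic shows why this setting leaves little room for references:

```latex
\begin{aligned}
T_{\text{context}} &= \lfloor 0.3 \times 8192 \rfloor = 2457 \text{ tokens} \\
T_{\text{chunk}} &\approx \frac{500 \text{ words}}{0.75 \text{ words/token}} \approx 667 \text{ tokens} \\
N_{\text{chunks}} &\le \left\lfloor \frac{2457}{667} \right\rfloor = 3 \\
T_{\text{response}} &= 8192 - 2457 = 5735 \text{ tokens} \approx 4300 \text{ words}
\end{aligned}
```

So at most about three 500-word references fit, compared with around five under the balanced setting, while the response budget grows to over 5,700 tokens.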

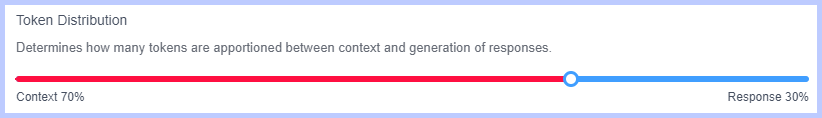

● Summarizing holistic context (70% Context to 30% Response)

With this distribution, the bot can include all references that meet the minimum relevance score and form a holistic summary from them. Keep in mind that you also have to write an appropriate prompt to make sure the bot generates only a short, clear answer based on all those references. If the bot cannot finish the answer within the allocated tokens, the answer will be cut off.
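The sketch below shows why a larger context share matters for this kind of summary. It assumes hypothetical relevance scores, a minimum score of 0.6, references of about 667 tokens each (roughly 500 words), and context budgets of 4,096 and 5,734 tokens, i.e. 50% and 70% of a hypothetical 8,192-token limit; none of these values come from ChatInsight. Every reference that meets the minimum score is kept, and the function reports whether they all fit in the context budget.

```python
from typing import NamedTuple


class Reference(NamedTuple):
    title: str
    score: float  # hypothetical relevance score for the query
    tokens: int   # hypothetical token count (about 500 words)


def pick_for_summary(
    refs: list[Reference], min_score: float, context_budget: int
) -> tuple[list[Reference], bool]:
    """Keep every reference scoring at least `min_score`.

    Returns the qualifying references and whether they all fit together
    within `context_budget` tokens.
    """
    qualifying = [r for r in refs if r.score >= min_score]
    total = sum(r.tokens for r in qualifying)
    return qualifying, total <= context_budget


scores = [0.92, 0.88, 0.81, 0.77, 0.74, 0.68, 0.63, 0.41, 0.35]
refs = [Reference(f"section {i + 1}", s, 667) for i, s in enumerate(scores)]

for label, budget in [("50% context", 4096), ("70% context", 5734)]:
    kept, all_fit = pick_for_summary(refs, min_score=0.6, context_budget=budget)
    print(f"{label}: {len(kept)} qualifying, all fit: {all_fit}")
# 50% context: 7 qualifying, all fit: False
# 70% context: 7 qualifying, all fit: True
```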
